
Why octal notation should be used for UTF-8 (and Unicode)

2016-10-05

Disclaimer: I read about this topic in another blog first, but I just can’t find that posting anymore. If you, the “original author”, happen to read this, drop me a note and I’ll add a link to your posting.

Now. It’s 2016 and I finally took the time to write a very basic UTF-8 encoder and decoder. IMHO, this is something everyone should do at least once to get a better understanding of what’s going on.

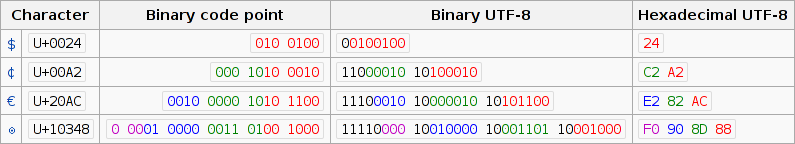

When you start reading up on the topic, you quickly come across notations like this one from Wikipedia:

Hexadecimal notation. Of course, why not, hex notation is used in many places when dealing with “raw” data. It’s common practice. Sadly, when talking about UTF-8, it hides the structure of the encoding and makes everything more complicated. Let’s have a quick look at how UTF-8 works – a very quick look.

In UTF-8, there are “leading bytes” and “continuation bytes”. A leading byte tells you “a multibyte sequence begins here”. One or more continuation bytes follow immediately. Together, they can be decoded and you’ll get one Unicode code point.

The possible byte patterns look like this:

- 0xxxxxxx: Highest bit not set, we’re in the ASCII range. Nothing to decode, no continuation bytes follow.
- 110xxxxx 10xxxxxx: Leading byte and one continuation byte.
- 1110xxxx 10xxxxxx 10xxxxxx: Leading byte and two continuation bytes.
- 11110xxx 10xxxxxx 10xxxxxx 10xxxxxx: Leading byte and three continuation bytes.

Actually, those are all the possible options. The original design of UTF-8 allowed sequences of up to 6 bytes, but RFC 3629 restricts it to a maximum of 4 bytes.

So, the x’s above can be arbitrary bits. They specify the Unicode code point. (Let’s ignore that some code points are not “allowed”.) Basically, a code point is an abstract number. Write that number in binary, pad it with leading zeros until it fills all the x’s, and copy the bits in. If the code point is a small number, you need fewer bytes (or only one byte); if it’s a large number, you need more bytes.
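Since I brought up writing an encoder: here’s a minimal Python sketch of that “copy the bits into the x’s” step. It’s an illustration, not the code I wrote back then; `encode_utf8` is a made-up name, and like the text above it ignores surrogates and other disallowed code points.

```python
def encode_utf8(cp: int) -> bytes:
    """Encode one code point by copying its bits into the x's of the UTF-8 patterns."""
    if not 0 <= cp <= 0x10FFFF:
        raise ValueError(f"code point out of range: {cp:#x}")
    if cp < 0x80:                                   # 0xxxxxxx
        return bytes([cp])
    if cp < 0x800:                                  # 110xxxxx 10xxxxxx
        return bytes([0b11000000 | (cp >> 6),
                      0b10000000 | (cp & 0b111111)])
    if cp < 0x10000:                                # 1110xxxx 10xxxxxx 10xxxxxx
        return bytes([0b11100000 | (cp >> 12),
                      0b10000000 | ((cp >> 6) & 0b111111),
                      0b10000000 | (cp & 0b111111)])
    # 11110xxx 10xxxxxx 10xxxxxx 10xxxxxx
    return bytes([0b11110000 | (cp >> 18),
                  0b10000000 | ((cp >> 12) & 0b111111),
                  0b10000000 | ((cp >> 6) & 0b111111),
                  0b10000000 | (cp & 0b111111)])
```

For example, `encode_utf8(0x20AC)` should give `b'\xe2\x82\xac'`, the same bytes as Python’s own `'€'.encode('utf-8')`.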

Now, look closely. All continuation bytes always carry 6 bits of information. In octal notation, those 6 bits are exactly two digits, because the first octal digit of a byte only covers its top two bits. The leading bits 10... will always be a leading 2 in octal.

You can do something similar with the leading bytes. If they start with 110... or 11110..., your octal number will start with 3 or 36, respectively, and the other digits of that octal number are part of the code point.

The 1110... is a little more tricky, but more on that below.
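To see where the 2, 3, 34 and 36 come from, you can let Python print the smallest and largest possible byte of each pattern in octal. A quick sketch (`PATTERNS` is just a hypothetical lookup table):

```python
# Smallest and largest byte for each pattern: all x's set to 0, then all x's set to 1.
PATTERNS = {
    "10xxxxxx (continuation)": (0b10000000, 0b10111111),
    "110xxxxx (2-byte lead)":  (0b11000000, 0b11011111),
    "1110xxxx (3-byte lead)":  (0b11100000, 0b11101111),
    "11110xxx (4-byte lead)":  (0b11110000, 0b11110111),
}

for pattern, (lo, hi) in PATTERNS.items():
    # "03o" = octal, zero-padded to three digits, the same width `od -t o1` uses
    print(f"{pattern}: {lo:03o} to {hi:03o}")
```

It prints the ranges 200 to 277, 300 to 337, 340 to 357 and 360 to 367. Continuation bytes always start with 2, leading bytes with 3, and the 3-byte leading bytes are exactly the ones starting with 34 or 35, which is where the trickiness comes from.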

What’s all that good for? Well, when reading UTF-8 encoded data in octal notation, you can read the Unicode code point (in octal) right off the bytes, without any real decoding process. Some examples:

German umlaut “ö”, octal Unicode code point is 366 (hex <U+00F6>):

    $ printf ö | od -t o1
    0000000 303 266
             ^^  ^^

Euro sign “€”, octal Unicode code point is 20254 (hex <U+20AC>):

    $ printf € | od -t o1
    0000000 342 202 254
              ^  ^^  ^^

Asian glyph “灀”, which I sadly don’t know the meaning of, octal Unicode code point is 70100 (hex <U+7040>):

    $ printf 灀 | od -t o1
    0000000 347 201 200
              ^  ^^  ^^

“Thumbs up” (👍), octal Unicode code point is 372115 (hex <U+1F44D>):

    $ printf 👍 | od -t o1
    0000000 360 237 221 215
              ^  ^^  ^^  ^^
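If you don’t have `od` at hand, a few lines of Python (3.9 or later, because of the `list[str]` annotation) reproduce these dumps and print the real code point in octal next to them, so you can check the digit-grabbing. `octal_bytes` is a hypothetical helper:

```python
def octal_bytes(text: str) -> list[str]:
    """UTF-8 bytes of text as 3-digit octal strings, like `od -t o1` prints them."""
    return [f"{byte:03o}" for byte in text.encode("utf-8")]


for ch in "ö€灀👍":
    # encoded bytes on the left, the real code point in octal on the right
    print(ch, " ".join(octal_bytes(ch)), "->", f"{ord(ch):o}")
```

For “ö”, that gives `303 266` next to `366`: drop the “3” and the “2”, glue the rest together, and you get the same digits.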

There’s one pitfall, though. If you’re dealing with a 3-byte sequence and the code point is above hex <U+7FFF>, then you have to take the second octal digit of the leading byte into account as well – kind of. For example:

Halfwidth katakana “ｷ” has an octal code point of 177567 (hex <U+FF77>) and it goes like this:

    $ printf ｷ | od -t o1
    0000000 357 275 267
             !^  ^^  ^^

That “57” has to be read as “17”.
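Why the “5”? A 3-byte leading byte is 1110abcd, where abcd are the top four bits of the code point. In octal that splits into 11, 10a and bcd, so the middle digit is 4 plus bit a, which is only set from <U+8000> on. A small Python sketch (`three_byte_lead` is a made-up name) shows the jump:

```python
def three_byte_lead(cp: int) -> str:
    """Octal leading byte of the 3-byte UTF-8 sequence for code point cp."""
    if not 0x800 <= cp <= 0xFFFF:
        raise ValueError(f"U+{cp:04X} is not in the 3-byte range")
    # 1110 followed by the top four bits of the 16-bit code point
    return f"{0b11100000 | (cp >> 12):03o}"


for cp in (0x7FFF, 0x8000, 0xFF77):
    print(f"U+{cp:04X}  code point {cp:o}  leading byte {three_byte_lead(cp)}")
```

The leading byte goes from 347 (code point 77777) to 350 (code point 100000): the middle digit flips from 4 to 5, and that extra bit is the leading “1” of the now six-digit octal code point.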

So, it all boils down to this:

- If you see an octal byte beginning with 0 or 1, it’s plain ASCII: “A” = “101” or “o” = “157”.

- If you see an octal byte beginning with 2, it’s a continuation byte. You can ignore the “2” and just use the remaining two digits.

- If it begins with 30 to 33, 34 or 36, it’s a leading byte (of a 2-, 3- or 4-byte sequence, respectively) and you can directly use the remaining digit(s).

- If it begins with 35, it’s a leading byte as well, but the “5” stands for a single “1” in the code point: In “357”, “17” is part of the code point.

- Or, if you don’t care that much: If it starts with a 3, it’s a leading byte.
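The rules translate almost one-to-one into code. Here’s a Python sketch (3.9 or later) that takes one complete, valid sequence as 3-digit octal strings, as `od -t o1` prints them, and returns the octal code point. `read_code_point` is a hypothetical name, and it doesn’t validate anything beyond the continuation-byte prefix:

```python
def read_code_point(octal_seq: list[str]) -> str:
    """Read the octal code point straight off one UTF-8 sequence.

    octal_seq holds 3-digit octal bytes as printed by `od -t o1`,
    e.g. ["303", "266"]. Assumes the sequence is complete and valid.
    """
    lead, *continuation = octal_seq
    if lead[0] in "01":             # 000..177: plain ASCII, the byte is the code point
        digits = lead
    elif lead.startswith("36"):     # 360..367: 4-byte lead, keep the last digit
        digits = lead[2]
    elif lead.startswith("35"):     # 350..357: 3-byte lead, the "5" stands for a "1"
        digits = "1" + lead[2]
    elif lead.startswith("34"):     # 340..347: 3-byte lead, keep the last digit
        digits = lead[2]
    else:                           # 300..337: 2-byte lead, keep the last two digits
        digits = lead[1:]
    for byte in continuation:       # 200..277: drop the leading "2"
        if byte[0] != "2":
            raise ValueError(f"{byte} is not a continuation byte")
        digits += byte[1:]
    return digits.lstrip("0") or "0"
```

For instance, `read_code_point(["357", "275", "267"])` returns `"177567"`, and `read_code_point(["303", "266"])` returns `"366"`.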

These few simple rules should make it pretty easy to spot the UTF-8 multibyte sequences in the following dump and you can even “decode” them:

    $ od -t o1 <data
    0000000 124 150 141 164 342 200 231 163 040 141 156 040 145 170 145 155
    0000020 160 154 141 162 171 040 144 165 155 160 056 040 342 200 234 110
    0000040 141 154 154 303 266 143 150 145 156 054 342 200 235 040 150 145
    0000060 040 163 141 151 144 056 012
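To check your manual decoding, you can also turn the dump back into bytes and let Python decode it. A minimal sketch, assuming the dump text is pasted in as-is (`DUMP` and `parse_od_o1` are made-up names). It doesn’t handle the `*` lines that `od` prints for repeated data; `od -v` avoids those:

```python
DUMP = """\
0000000 124 150 141 164 342 200 231 163 040 141 156 040 145 170 145 155
0000020 160 154 141 162 171 040 144 165 155 160 056 040 342 200 234 110
0000040 141 154 154 303 266 143 150 145 156 054 342 200 235 040 150 145
0000060 040 163 141 151 144 056 012
"""


def parse_od_o1(dump: str) -> bytes:
    """Turn `od -t o1` output back into raw bytes."""
    data = bytearray()
    for line in dump.splitlines():
        fields = line.split()
        # fields[0] is the octal offset; every field after it is one byte
        data.extend(int(field, 8) for field in fields[1:])
    return bytes(data)


text = parse_od_o1(DUMP).decode("utf-8")
print(text, end="")
for ch in text:
    if ord(ch) > 0x7F:  # anything outside ASCII came from a multibyte sequence
        print(ch, f"octal {ord(ch):o}", f"U+{ord(ch):04X}")
```

The octal values it lists for the non-ASCII characters should match what you get by applying the rules by hand.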

If the convention for writing Unicode code points were an octal <U+372115> instead of a hexadecimal <U+1F44D>, that would be really nice. Sadly, that’s not the case. Of course, nobody knew back then that UTF-8 would become the dominant encoding for Unicode, so you can’t blame people.

Still, when reading octal dumps, you can easily grab the digits of the code point in octal, convert them to hex, and you’re done. And if you’re not interested in “decoding” anything at all, just knowing the simple rules above can be helpful: It’s trivial to tell bytes starting with 3 or 2 apart from the rest and, bam, you know that you’re (most likely) looking at UTF-8. That’s much more complicated to do in hex.
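That last conversion step is a one-liner in most languages. In Python, for example (`octal_to_u_plus` is just an illustrative name):

```python
def octal_to_u_plus(octal_digits: str) -> str:
    """Turn an octal code point read off a dump into the usual U+ hex notation."""
    return f"U+{int(octal_digits, 8):04X}"


for digits in ("366", "20254", "70100", "372115", "177567"):
    print(digits, "->", octal_to_u_plus(digits))
```

`octal_to_u_plus("372115")` gives `U+1F44D`; going the other way, `f"{0x1F44D:o}"` gives `372115`.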